Once you've used a tool like fslmaths, 3dcalc, or Marsbar to create a single ROI, you can combine several of these ROIs using the same tools. This is useful, for example, when creating a larger-scale mask that encompasses several different areas.

In each case, combining ROIs is simply a matter of creating new images with a calculator-like tool. Think of your TI-83 from the good old days, minus those frustrating yet addictive games such as FallDown. (Personal record: 1083.) With fslmaths, use the -add flag to sum several different ROIs together voxel by voxel, e.g.:

```
fslmaths roi1 -add roi2 -add roi3 outputfile
```

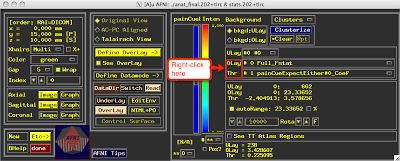

With AFNI:

```
3dcalc -a roi1 -b roi2 -c roi3 -expr '(a+b+c)' -prefix outputfile
```
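To make the arithmetic concrete, here is a toy Python sketch that treats each ROI as a flat list of 0/1 voxels. It only illustrates the idea and is not how FSL or AFNI work internally; the helper names `add_masks` and `binarize` are hypothetical. It shows one thing worth knowing: wherever ROIs overlap, the summed image holds a 2 or a 3 instead of a 1. If you need a strict 0/1 mask, binarize the result, for example by appending `-bin` to the fslmaths command or by using `-expr 'step(a+b+c)'` with 3dcalc.

```python
# Toy illustration only: each "ROI" is a binary mask flattened to a 1-D list
# of voxels. This mimics voxelwise addition (like fslmaths -add or
# 3dcalc -expr '(a+b+c)'); it is not how FSL or AFNI are implemented.

def add_masks(*masks):
    """Sum binary masks voxel by voxel; overlapping voxels end up above 1."""
    return [sum(voxels) for voxels in zip(*masks)]

def binarize(image):
    """Set every voxel greater than zero to 1 and everything else to 0."""
    return [1 if v > 0 else 0 for v in image]

roi1 = [1, 1, 0, 0, 0]
roi2 = [0, 1, 1, 0, 0]  # overlaps roi1 at the second voxel
roi3 = [0, 0, 0, 0, 1]

summed = add_masks(roi1, roi2, roi3)
combined = binarize(summed)
print(summed)    # [1, 2, 1, 0, 1]
print(combined)  # [1, 1, 1, 0, 1]
```

Whether the overlap values matter depends on what you do next: if the combined image is only used as a mask, the binarized version is the safer choice.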

Combining ROIs with Marsbar is a bit more involved, but also easier, since you can do it from the GUI, as shown in the following video.

Many thanks to alert reader Anonymous, who is both too cool to register a username and once scored a 1362 on FallDown. Now all you gotta do is lie back and wait for the babe stampede!