One complaint I have with FMRI tutorials and manuals is this: The user is provided a downloadable dataset and given a tutorial to generate a specific result. There is some commentary about different aspects of the analysis pipeline, and there might be a nod to artifacts that show up in the data. But for the most part things are expected to be more or less uneventful, and if anything goes wrong during the tutorial, it is likely because your fat fingers made a typo somewhere. God, you are fat.

Another thing: When you first read theory textbooks about fMRI data analysis, a few bogeymen are mentioned, such as head motion or experimental design confounds. However, nothing is mentioned about technicians or RAs screwing stuff up, or (more likely) you yourself screwing stuff up. Not because you are fat, necessarily, but it doesn't help.

Bottom line: Nobody tells you how to respond when stuff goes wrong - really wrong - which it inevitably will.

No, I'm not talking about waking up feeling violated next to your frat brother; I'm talking about the millions of tiny things that can derail data acquisition or data analysis or both. This can end up costing your lab and the American taxpayer literally thousands - that's thousands, with a "T" - of dollars. No wonder the Tea Party is pissed off. And while you were hoping to get that result showing that conservatives/liberals exhibit abnormal neural patterns when shown pictures of African-Americans, and are therefore bigoted/condescending scum that deserve mandatory neural resocialization, instead you end up with a statistical map of blobs that looks like the frenetic finger-painting of a toddler tripping balls from Nutella overdose. How could this happen? Might as well go ahead and dump all seventy activation clusters in a table somewhere in the supplementary material where it will never see the light of day, and argue that the neural mechanisms of prejudice arise from the unfortunate fact that the entire brain is, indeed, active. (If this happens, just use the anodyne phrase "frontal-parietal-temporal-occipital network" to describe results like these. It works - no lie.)

How to deal with this? The best approach, as you learned in your middle school health class, is prevention. (Or abstinence. But let's get real, kids these days are going to analyze FMRI data whether we like it or not, the little minks.) Here are some prophylactic measures you can take to ensure that you do not get scalded by unprotected data analysis:

1) Plan your experiment. This seems intuitive, but you would be surprised how many imaging experiments get rushed out the door without a healthy dose of deliberative planning. This is because of the following reasons:

- You will probably get something no matter what you do.

- See reason #1

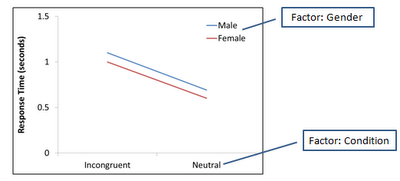

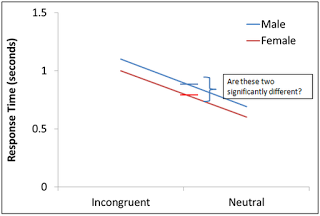

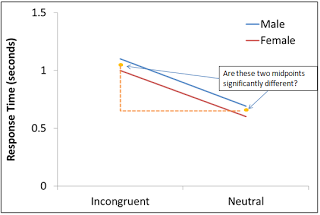

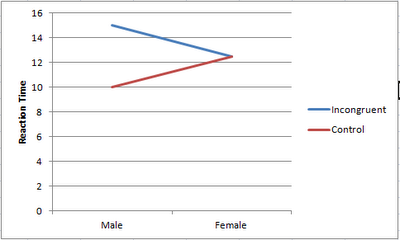

2) Run a behavioral pilot. Unless the neural mechanism or process is entirely cognitive and therefore has no behavioral correlate (e.g., instructing the subject to fantasize about Nutella), try to obtain a performance measure of your conditions, such as reaction time. Doing this will also reinforce the previous point, which is to plan out your experiment. For example, the difference in reaction time between conditions can provide an estimate of how many trials you may need during your scanning session, and also lead to stronger hypotheses about what regions might be driving this effect.

3) Have a processing stream already in place before the data starts rolling in. After running your first pilot subject, have a script that extracts the data and puts everything in a neat, orderly file hierarchy. For example, create separate directories for your timing data and for your raw imaging data.

4) As part of your processing stream, use clear, understandable labels for each new analysis that you do. Suffixes such as "Last", "Final", "Really_The_Last_One", and "Goodbye_Cruel_World", although optimistic, can obscure what analysis was done when, and for what reason. This will protect you from disorganization, the bane of any scanning experiment.

5) Analyze the hell out of your pilot scan. Be like psychopath federal agent Jack Bauer and relentlessly interrogate your subject. Was anything unclear? What did they think about the study, besides the fact that it was so boring and uncomfortable that instead of doing it again they would rather have a vasectomy with a weed-whipper? You may believe your study is the bomb, but unless the subject can actually do it, your study is about as useful as a grocery bag full of armpit hair.

6) Buy a new printer. Chicks dig guys with printers, especially printers that print photos.

[Image caption: Your ticket to paradise]

7) Check the results of each step of your processing stream. After you've had some experience looking at brain images, you should have an intuition about what looks reasonable and what looks suspect. Knowing which step failed is critical for troubleshooting.

8) Know how to ask questions on the message boards. AFNI, SPM, and FSL all have excellent message boards and listservs where your questions will get answered quickly. However, you should make your question clear and concise, and provide enough detail about everything you did up to the point where your analysis went catastrophically wrong. Moderators get pissed when questions are vague, whiny, or unclear.

9) When all else fails, blame the technicians. FMRI has been around for a while now, but the magnets are still extremely large and unwieldy, cost millions to build and maintain, and we still can't get around the temporal resolution-spatial resolution tradeoff. Clearly, the physicists have failed us.
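For points 3 and 4, here is a minimal sketch of what "a script that puts everything in a neat, orderly file hierarchy" might look like. Everything in it is an assumption made for illustration: the `raw`, `timing`, and `analysis` directory names, the `sub-01` style subject ID, and the date-plus-description labeling scheme are hypothetical, not a convention required by AFNI, SPM, or FSL.

```python
import re
from datetime import date
from pathlib import Path

# Hypothetical layout: one folder per subject, with separate
# directories for raw imaging data, timing files, and analyses.
SUBDIRS = ("raw", "timing", "analysis")


def make_subject_tree(study_root, subject_id):
    """Create the per-subject directory skeleton and return its path."""
    subject_dir = Path(study_root) / subject_id
    for name in SUBDIRS:
        # exist_ok=True makes the script safe to re-run on the same subject.
        (subject_dir / name).mkdir(parents=True, exist_ok=True)
    return subject_dir


def analysis_label(description, when=None):
    """Build a dated, descriptive label instead of 'Final' or 'Really_The_Last_One'."""
    when = when or date.today()
    # Lowercase the description and collapse anything non-alphanumeric to '-'.
    slug = re.sub(r"[^a-z0-9]+", "-", description.lower()).strip("-")
    return f"{when.isoformat()}_{slug}"
```

Calling `make_subject_tree("my_study", "sub-01")` after each scan, and naming each analysis folder with `analysis_label(...)`, at least tells you what was done and when, which is more than "Goodbye_Cruel_World" ever will.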

These are just a few pointers to help you address some of the difficulties and problems that waylay you at every turn. Obviously there are other dragons to slay once you have collected a good sample size and need to plan your interpretation and possible follow-up analysis. However, devoting time to planning your experiment and running appropriate behavioral studies can go a long way toward mitigating the suffering and darkness that follows upon our unhappy trade.
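As for turning a behavioral pilot into a trial count (point 2), one rough way to do it is a textbook two-sample normal approximation on the pilot reaction times. This is a back-of-the-envelope sketch, not a proper fMRI power analysis: it assumes trials are independent, that both conditions get the same number of trials, and that the effect in the scanner resembles the behavioral one. The function name and the default `alpha` and `power` values are my own choices for illustration.

```python
import math
from statistics import NormalDist, mean, stdev


def trials_per_condition(rt_a, rt_b, alpha=0.05, power=0.80):
    """Rough number of trials per condition needed to detect the pilot RT difference.

    Uses n = 2 * ((z_(1-alpha/2) + z_power) * sd / delta)^2, a two-sided,
    two-sample normal approximation (an assumption, see the text above).
    """
    delta = abs(mean(rt_a) - mean(rt_b))
    if delta == 0:
        raise ValueError("No difference between conditions in the pilot data.")
    # Pooled standard deviation, assuming equal trial counts per condition.
    pooled_sd = math.sqrt((stdev(rt_a) ** 2 + stdev(rt_b) ** 2) / 2)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    n = 2 * ((z_alpha + z_power) * pooled_sd / delta) ** 2
    return math.ceil(n)
```

The point is less the exact number than the direction: a small reaction-time difference relative to its variability means many more trials, which is worth knowing before you book the scanner.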